At Krux, we are addicted to metrics. Metrics not only give us the insight to diagnose anomalies, but also help us make good decisions on things like capacity planning, performance tuning, cost optimization, and beyond. The benefits of well-instrumented infrastructure are felt far beyond the operations team; with well-defined metrics, developers, product managers, sales and support staff are all able to make sounder decisions within their fields of expertise.

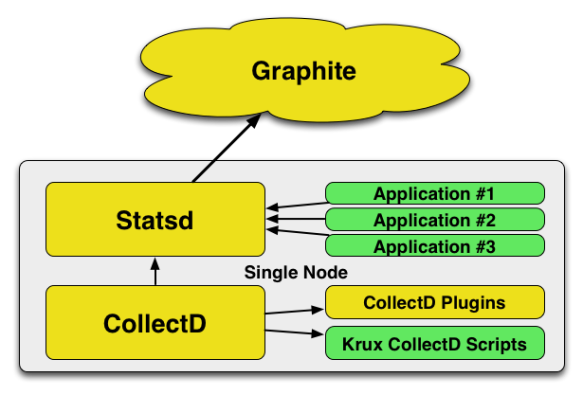

For collecting and storing metrics, we really like using Statsd, Collectd and Graphite. We use Collectd to gather system information like disk usage, CPU usage, and service state. Collectd relays its metrics to Graphite through Statsd, and any applications we write stream data directly to Statsd as well. I cover this topic in depth in a previous article.
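For applications that stream data to Statsd directly, the wire format is one line of text per metric sent over UDP (`name:value|type`, where `c` is a counter, `ms` a timer and `g` a gauge). Below is a minimal sketch of such a client, assuming Statsd listens on its default port 8125; `StatsdClient` and `format_metric` are hypothetical names, and in practice you would normally use an existing Statsd client library.

```python
import socket


def format_metric(name, value, metric_type):
    """Build one Statsd line, e.g. 'app.requests:1|c' or 'app.latency:120|ms'."""
    return "%s:%s|%s" % (name, value, metric_type)


class StatsdClient(object):
    """Tiny fire-and-forget UDP client (hypothetical, for illustration only)."""

    def __init__(self, host="localhost", port=8125, prefix=""):
        self.address = (host, port)
        self.prefix = prefix
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    def _send(self, name, value, metric_type):
        if self.prefix:
            name = "%s.%s" % (self.prefix, name)
        line = format_metric(name, value, metric_type)
        try:
            self.sock.sendto(line.encode("ascii"), self.address)
        except OSError:
            pass  # metrics must never take the application down
        return line  # returned so callers (and tests) can see what was sent

    def incr(self, name, count=1):
        """Increment a counter."""
        return self._send(name, count, "c")

    def timing(self, name, milliseconds):
        """Record a duration in milliseconds."""
        return self._send(name, milliseconds, "ms")

    def gauge(self, name, value):
        """Set a gauge to an absolute value."""
        return self._send(name, value, "g")
```

Because UDP does not wait for an acknowledgement, an unreachable Statsd does not slow the application down, which is what makes it cheap to instrument everything.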

The real power of this system comes from automatically instrumenting all your moving parts, so everything emits stats as a part of normal operations. In an ideal world, your metrics are so encompassing that drawing a graph of your KPIs tells you everything you need to know about a service, and digging into logs is only needed in the most extreme circumstances.

Instrumenting your infrastructure

In a previous article, I introduced libvmod-statsd, which lets you instrument Varnish to emit realtime stats that come from your web requests. Since then, I’ve also written an accompanying module for Apache, called mod_statsd.

Between those two modules, all of our web requests are now automatically measured, regardless of whether the application behind them sends its own measurements. In fact, you may remember this graph that we build for every service we operate, which is composed of metrics coming directly from Varnish or Apache:

As you can see from the graph, we track the number of good responses (HTTP 2xx/3xx) and bad responses (HTTP 4xx/5xx) to make sure our users are getting the content they ask for. On the right-hand side of the graph, we track the upper 95th and 99th percentile response times to ensure requests come back in a timely fashion. Lastly, we add a bit of bookkeeping: we track a safe QPS threshold (the magenta horizontal line) to ensure there is enough service capacity for all incoming requests, and we draw vertical lines whenever a deploy happens or Map/Reduce starts, as those two events are leading indicators of behavior change in services.
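To make those panels concrete, here is a minimal sketch of how one window of requests could be summarized: responses are bucketed into good (2xx/3xx) and bad (4xx/5xx), and the upper 95th/99th percentiles are computed with the nearest-rank method. It illustrates the idea and is similar in spirit to Statsd's `upper_N` timer stats, not a copy of its code; `summarize` and `upper_percentile` are hypothetical names.

```python
import math


def upper_percentile(timings, pct):
    """Nearest-rank percentile: the largest value among the lowest pct% of samples."""
    if not timings:
        return None
    ordered = sorted(timings)
    # Multiply before dividing so integer inputs stay exact (e.g. 99 * 100 / 100.0).
    rank = max(1, int(math.ceil(pct * len(ordered) / 100.0)))
    return ordered[rank - 1]


def summarize(requests):
    """Summarize (status_code, duration_ms) pairs into the graph's series."""
    good = bad = 0
    timings = []
    for status, duration_ms in requests:
        if 200 <= status < 400:    # 2xx/3xx: the user got what they asked for
            good += 1
        elif 400 <= status < 600:  # 4xx/5xx: client or server error
            bad += 1
        timings.append(duration_ms)
    return {
        "good": good,
        "bad": bad,
        "upper_95": upper_percentile(timings, 95),
        "upper_99": upper_percentile(timings, 99),
    }
```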

But not every system you run will be a web server; there are process managers, cron jobs, configuration management and perhaps even cloud infrastructure, to name just a few. In the rest of this article, I’d like to highlight a few techniques, and some Open Source Software we wrote, that you can use to instrument those types of systems as well.

Process management

Odds are you’re writing (or using) quite a few daemons as part of your infrastructure, and that those daemons will need to be managed somehow. On Linux, you have a host of choices like SysV, Upstart, Systemd, daemontools, Supervisor, etc. Depending on your flavor of Linux, one of these is likely to be the default for system services already.

For our own applications & services, we settled on Supervisor; we found it to be very reliable and easy to configure in a single file. It also provides good logging support, an admin interface, and monitoring hooks. There’s even a great puppet module for it.

The one bit we found lacking is direct integration with our metrics system to tell us what happened to a service and when. For that purpose I wrote Sulphite, a Supervisor Event Listener; Sulphite emits a stat to Graphite every time a service changes state (for example, from running to exited). This lets us track restarts, crashes, unexpected downtime and more as part of our service dashboard.

You can get Sulphite by installing it from PyPI like this:

$ pip install sulphite

And then configure it as an Event Listener in Supervisor like this:

[eventlistener:sulphite]
command=sulphite --graphite-server=graphite.example.com --graphite-prefix=events.supervisor --graphite-suffix=`hostname -s`
events=PROCESS_STATE
numprocs=1
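To illustrate what a Supervisor event listener like Sulphite does, here is a minimal sketch of the event listener protocol: the listener prints `READY`, reads a header line and a payload of `len` characters from stdin, and acknowledges with `RESULT 2\nOK`. This is not Sulphite’s code; the metric layout (`prefix.group.process.state.suffix`), the function names and the use of Graphite’s plaintext protocol on port 2003 are assumptions for illustration.

```python
import socket
import sys
import time


def parse_tokens(line):
    """Parse Supervisor's space-separated 'key:value' tokens into a dict."""
    return dict(token.split(":", 1) for token in line.split())


def state_metric(headers, payload, prefix, suffix, timestamp):
    """Build a Graphite plaintext line for a PROCESS_STATE_* event.

    The path layout prefix.group.process.state.suffix is hypothetical.
    """
    state = headers["eventname"][len("PROCESS_STATE_"):].lower()
    path = ".".join([prefix, payload["groupname"], payload["processname"],
                     state, suffix])
    return "%s 1 %d\n" % (path, timestamp)


def send_to_graphite(line, host, port=2003):
    """Send one plaintext line to carbon (2003 is its default line port)."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(line.encode("ascii"))


def run_listener(host, prefix, suffix, stdin=sys.stdin, stdout=sys.stdout):
    """Speak the Supervisor event listener protocol until stdin closes.

    Assumes ASCII payloads, since 'len' counts bytes but we read characters.
    """
    while True:
        stdout.write("READY\n")  # ask supervisord for the next event
        stdout.flush()
        header_line = stdin.readline()
        if not header_line:  # EOF: supervisord has gone away
            return
        headers = parse_tokens(header_line)
        body = stdin.read(int(headers["len"]))
        if headers["eventname"].startswith("PROCESS_STATE_"):
            payload = parse_tokens(body.split("\n", 1)[0])
            line = state_metric(headers, payload, prefix, suffix,
                                int(time.time()))
            try:
                send_to_graphite(line, host)
            except OSError:
                pass  # never let a Graphite outage stall supervisord
        stdout.write("RESULT 2\nOK")  # acknowledge the event
        stdout.flush()
```

A script’s entry point would call something like `run_listener("graphite.example.com", "events.supervisor", socket.gethostname().split(".")[0])`. Since stdout carries the protocol, any logging from a listener has to go to stderr.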

Configuration management

Similarly, you’re probably using some sort of configuration management system like Chef or Puppet, especially if you have more than a handful of nodes. In our case, we use Puppet for all of our servers, and as part of its operations, it produces a lot of valuable information in report form. By default, these are stored as log files on the client & server, but using the custom reports functionality, you can send this information on to Graphite as well, letting you correlate changes in service behavior to changes made by Puppet.
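Conceptually, a custom report processor that feeds Graphite just flattens the metrics of each Puppet run into Graphite paths. Puppet report processors themselves are written in Ruby; the Python sketch below only illustrates the idea, assuming the run’s metrics have already been parsed into a dict shaped like an agent’s `last_run_summary.yaml` (sections such as `resources`, `time`, `changes` and `events`). The function name and the `prefix.hostname.section.metric` layout are hypothetical.

```python
import time

# Sections of a Puppet run summary that hold numeric metrics.
METRIC_SECTIONS = ("resources", "time", "changes", "events")


def report_to_graphite_lines(summary, prefix, hostname, timestamp=None):
    """Flatten a parsed Puppet run summary into Graphite plaintext lines."""
    if timestamp is None:
        timestamp = int(time.time())
    # Dots separate path components in Graphite, so flatten FQDNs.
    host = hostname.replace(".", "_")
    lines = []
    for section in METRIC_SECTIONS:
        values = summary.get(section) or {}
        for name in sorted(values):
            value = values[name]
            # Skip anything that is not a plain number.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                continue
            lines.append("%s.%s.%s.%s %s %d"
                         % (prefix, host, section, name, value, timestamp))
    return lines
```

Each resulting line would then be written to the host and port configured for the processor.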

We open sourced the code we use for that, and you can configure Puppet to use it by following the installation instructions and creating a simple graphite.yaml file that looks something like this:

$ cat /etc/puppet/graphite.yaml
---
:host: 'graphite.example.com'
:port: 2023
:prefix: 'puppet.metrics'

Then update your Puppet configuration file (puppet.conf) like this:

[master]
pluginsync = true
report = true
reports = store,graphite

[agent]
pluginsync = true
report = true

Programming language support

One of the best things you can do to instrument your infrastructure is to provide a base library that includes statistics, logging & monitoring support for the language(s) your company uses. We’re big users of Python and Java, so we created libraries for each of those. Below, I’ll show you the Python library, which we’ve open sourced.

Our main design goal was to make it easy for our developers to do the right thing: simply by using the base library, developers get convenient access to patterns and code they won’t have to write again, while operators get all the knobs & insights needed to run the app in production.

The library comes with two main entry points you can inherit from for apps you might want to write. There’s krux.cli.Application for command line tools, and krux.tornado.Application for building dynamic services. You can install it by running:

$ pip install krux-stdlib

Here’s what a basic app might look like built on top of krux-stdlib:

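import krux.cli


class App(krux.cli.Application):

    def __init__(self):
        # Call to the superclass to bootstrap.
        super(App, self).__init__(name='sample-app')

    def run(self):
        stats = self.stats
        log = self.logger

        # Time the whole run; the stat is reported under the app's prefix.
        with stats.timer('run'):
            log.info('running...')
            ...


def main():
    # Entry point, e.g. for a 'sample-app' console script.
    App().run()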

The ‘name’ argument above uniquely identifies the app across your business environment: it’s used as the prefix for stats, as the identifier in log files, and as the program name in the usage message. Without adding any additional code (and therefore work), here’s what is immediately available to operators:

$ sample-app -h
[…]
logging:
  --log-level {info,debug,critical,warning,error}
                        Verbosity of logging. (default: warning)

stats:
  --stats               Enable sending statistics to statsd. (default: False)
  --stats-host STATS_HOST
                        Statsd host to send statistics to. (default: localhost)
  --stats-port STATS_PORT
                        Statsd port to send statistics to. (default: 8125)
  --stats-environment STATS_ENVIRONMENT
                        Statsd environment. (default: dev)

Now any app built on this library can be deployed to production, with stats & logging enabled, like this:

$ sample-app --stats --log-level warning

Both krux.cli and krux.tornado will capture metrics and log lines as part of their normal operation, so even if developers aren’t adding additional information, you’ll still get a good baseline of metrics just from using this as a base class.
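To show how little code this pattern needs, here is a hypothetical, stdlib-only base class (not krux-stdlib’s implementation) that registers the same logging and stats options shown in the help output above, and derives the program name, logger and stats prefix from `name`. The `environment.name` prefix layout and the `BaseApplication` name are assumptions.

```python
import argparse
import logging

LOG_LEVELS = ["info", "debug", "critical", "warning", "error"]


class BaseApplication(object):
    """Hypothetical base class: subclasses get logging and stats flags for free."""

    def __init__(self, name, argv=None):
        self.name = name
        self.parser = argparse.ArgumentParser(
            prog=name,  # the name shows up in the usage message
            formatter_class=argparse.ArgumentDefaultsHelpFormatter,
        )
        self._add_standard_arguments()
        self.args = self.parser.parse_args(argv)

        # The name doubles as the logger identifier...
        self.logger = logging.getLogger(name)
        self.logger.setLevel(self.args.log_level.upper())
        # ...and as part of the stats prefix (layout is an assumption).
        self.stats_prefix = "%s.%s" % (self.args.stats_environment, name)

    def _add_standard_arguments(self):
        group = self.parser.add_argument_group("logging")
        group.add_argument("--log-level", default="warning", choices=LOG_LEVELS,
                           help="Verbosity of logging.")

        group = self.parser.add_argument_group("stats")
        group.add_argument("--stats", action="store_true", default=False,
                           help="Enable sending statistics to statsd.")
        group.add_argument("--stats-host", default="localhost",
                           help="Statsd host to send statistics to.")
        group.add_argument("--stats-port", type=int, default=8125,
                           help="Statsd port to send statistics to.")
        group.add_argument("--stats-environment", default="dev",
                           help="Statsd environment.")
```

`ArgumentDefaultsHelpFormatter` is what appends the `(default: …)` suffix to each help line. A subclass only passes its name to the constructor, just as the `App` example above does, and inherits every flag.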

AWS costs

We run most of our infrastructure inside AWS, and we pride ourselves on our cost management in such an on-demand environment; we optimize every bit of our infrastructure to eliminate waste and ensure we get the biggest bang for our buck.

Part of how we do this is to track Amazon costs as they happen in realtime, and cross-correlate them to the services we run. Since Amazon exposes your ongoing expenses as CloudWatch metrics, you can programmatically access them and add them to your graphite service graphs.

Start by installing the CloudWatch CLI tools and then, for every Amazon service you care about, simply run:

$ mon-get-stats EstimatedCharges \
    --namespace "AWS/Billing" \
    --statistics Sum \
    --dimensions "ServiceName=${service}" \
    --start-time $date

You can then send those numbers to Statsd using your favorite mechanism.
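As one way to do that from Python, here is a sketch using boto3’s CloudWatch `get_metric_statistics` instead of the CLI, plus a helper that turns the newest datapoint into a Statsd gauge line. Assumptions: boto3 is installed and has credentials, billing metrics are enabled for the account (AWS publishes them only in us-east-1), the `Currency=USD` dimension applies to your account, and the `aws.billing.<service>` metric name and the helper names are hypothetical.

```python
import datetime
import re

BILLING_REGION = "us-east-1"  # AWS publishes billing metrics only in this region


def latest_value(datapoints, statistic="Sum"):
    """Return the given statistic from the most recent datapoint, or None."""
    if not datapoints:
        return None
    newest = max(datapoints, key=lambda point: point["Timestamp"])
    return newest[statistic]


def gauge_line(service, value, prefix="aws.billing"):
    """Format a Statsd gauge line; the metric naming here is hypothetical."""
    safe_name = re.sub(r"[^A-Za-z0-9_-]", "_", service)
    return "%s.%s:%s|g" % (prefix, safe_name, value)


def fetch_estimated_charges(service, hours=24):
    """Query EstimatedCharges for one service, mirroring the CLI call above."""
    import boto3  # third-party; imported here so the helpers work without it

    cloudwatch = boto3.client("cloudwatch", region_name=BILLING_REGION)
    end = datetime.datetime.now(datetime.timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/Billing",
        MetricName="EstimatedCharges",
        Dimensions=[
            {"Name": "ServiceName", "Value": service},
            {"Name": "Currency", "Value": "USD"},
        ],
        StartTime=end - datetime.timedelta(hours=hours),
        EndTime=end,
        Period=3600,
        Statistics=["Sum"],
    )
    return latest_value(response["Datapoints"])
```

A cron job could loop over the services you care about, skip any that return `None`, and hand each `gauge_line(service, value)` to your Statsd client.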

Further reading

The tips and techniques above are more detailed examples from a presentation I’ve given about measuring a million metrics per second with minimal developer overhead. If you found them interesting, there are more in the slideshow below:

Excellent work, Jos! I saw your Puppet Camp Berlin presentation and you’ve made tooling an infrastructure for statistics seem effortless.

Could you elaborate more about how collectd communicates with statsd in the first diagram? I’ve gotten collectd to write directly to graphite, but I’ve not tried relaying through statsd.

I was just about to ask the same question…

The graphic and text say that collectd writes to statsd and statsd writes to graphite. How do you configure collectd to write to statsd???

Great article! Thanks!

Ping! Same question here. There’s a collectd plugin to *receive* data from statsd, but not to send.

My conclusion was to skip statsd and have collectd send directly to graphite. This seems to be working fine. Still very curious how/why you put statsd in the middle.

We’ve developed the tool to write collectd metrics to statsd together with our friends at Lyft; it turns out it wasn’t publicly available before, but it is now: https://github.com/lyft/collectd-statsd

I hope this will be useful to you all!

Cool! Can you explain why this is better than just sending from collectd to graphite directly? Something about the aggregation features statsd adds?
